“Inspection does not improve the quality, nor guarantee quality. Inspection is too late. The quality, good or bad, is already in the product. Quality cannot be inspected into a product or service; it must be built into it.”

—W. Edwards Deming

Built-In Quality

Built-In Quality practices ensure that each Solution element, at every increment, meets appropriate quality standards throughout development. The Enterprise’s ability to deliver new functionality with the shortest sustainable lead time, and adapt to rapidly changing business environments, depends on Solution quality. So, it should be no surprise that built-in quality is one of the SAFe Core Values as well as a principle of the Agile Manifesto, “Continuous attention to technical excellence and good design enhances agility” [1]. Built-in quality is also a core principle of the Lean-Agile Mindset, helping to avoid the cost of delays (CoDs) associated with recalls, rework, and fixing defects. SAFe’s built-in quality philosophy applies systems thinking to optimize the whole system, ensuring a fast flow across the entire Development Value Stream, and makes quality everyone’s job.

All teams, including software, hardware, operations, product marketing, legal, security, compliance, and others, share the goals and principles of built-in quality. However, the practices will vary by discipline because their work products vary.

Details

To support Business Agility, enterprises must continually respond to market changes, and the quality of the work products that drive business value directly determines how quickly teams can deliver. The work products that drive business value vary by domain but include software, hardware designs, scripts, configurations, images, marketing material, contracts, and many others. Products built on stable technical foundations that follow standards are easier to change and adapt. This is even more critical for large solutions, as the cumulative effect of even minor defects and wrong assumptions can create unacceptable consequences.

Building in high quality requires ongoing training and commitment, but the business benefits warrant the investment:

- Higher customer satisfaction

- Improved velocity and delivery predictability

- Better system performance

- Improved ability to innovate, scale, and meet compliance requirements

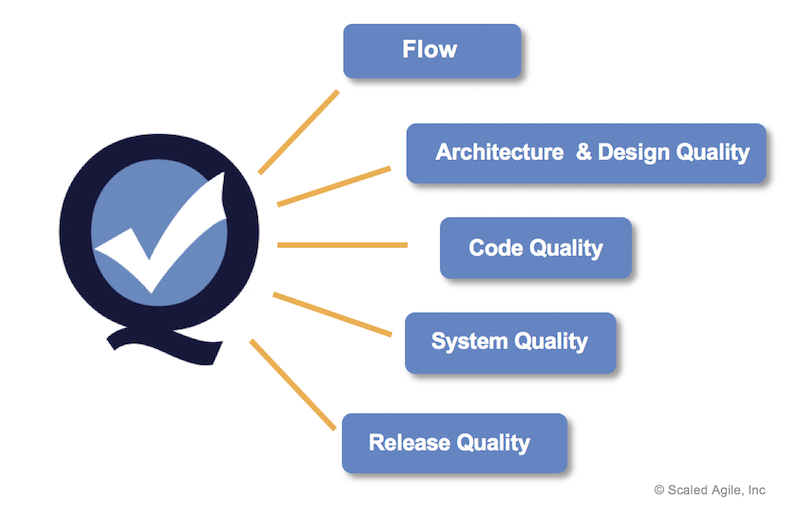

The remainder of this article describes SAFe’s five aspects of built-in quality for technology-focused teams and work products (Figure 1). Business-focused teams can use them as a reference when applying built-in quality practices to their work products. Establishing flow is fundamental to all teams as it describes how to remove the errors, rework, and other waste that slows throughput. The remaining four describe quality practices that can be adapted to different domains including test-first, automation, and exploring alternatives with set-based design. The Built-in Quality dimension of Team and Technical Agility also contains quality guidance that is generally applicable to all teams – pairing, collective ownership, standards, automation, and definition of done.

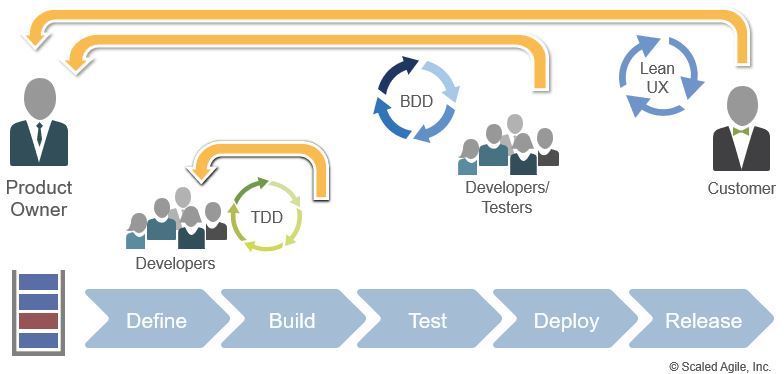

Achieving Flow with Test-First and a Continuous Delivery Pipeline

Agile teams operate in a fast, flow-based system to quickly develop and release high-quality business capabilities. Instead of performing most testing at the end, Agile teams define and execute many tests early, often, and at multiple levels. Tests are defined for code changes using Test-Driven Development (TDD) [2], Story, Feature, and Capability acceptance criteria using Behavior-Driven Development (BDD) [3], and Feature benefit hypothesis using Lean-UX [4] (Figure 2). Building in quality ensures that Agile development’s frequent changes do not introduce new errors and enables fast, reliable execution.

Think Test-First

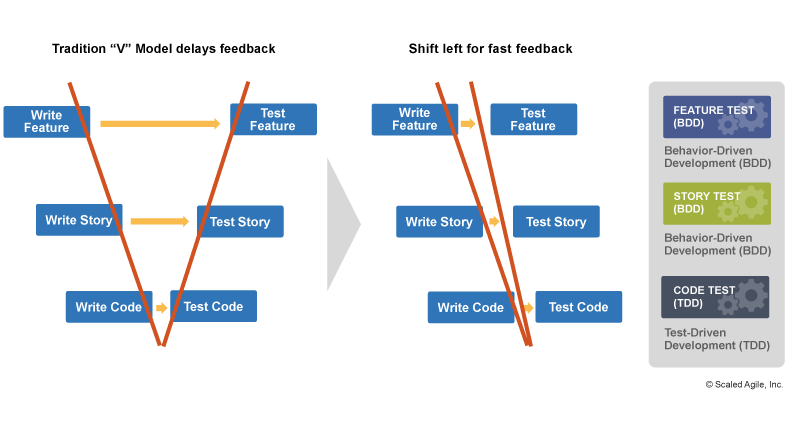

Agile teams generate tests for everything (Features, Stories, and code), ideally before (or at the same time as) the item is created, or test-first. Test-first applies to both functional requirements (Features and Stories) and non-functional requirements (NFRs) for performance, reliability, etc. A test-first approach collapses the traditional “V-Model” by creating tests earlier in the development cycle (Figure 3).

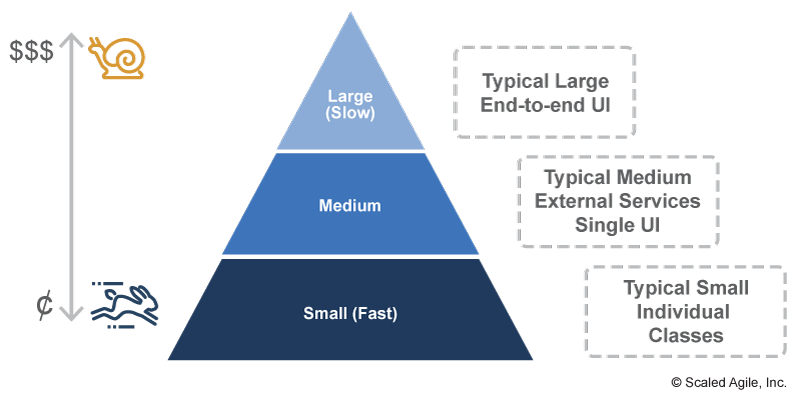

To support fast flow, tests need to run quickly, and teams strive to automate them. Since larger, UI-based, end-to-end tests run much slower than small, automated tests, we desire a balanced testing portfolio with many small fast tests and fewer large slow tests. Test-first thinking creates a balanced Testing Pyramid (Figure 4). Unfortunately, many organizations’ testing portfolios are unbalanced, with too many large, slow, expensive tests, and too few small, quick, cheap tests. By building large amounts of code and Story-level tests, organizations reduce their reliance on slower, end-to-end, expensive tests.

Build a Continuous Delivery Pipeline

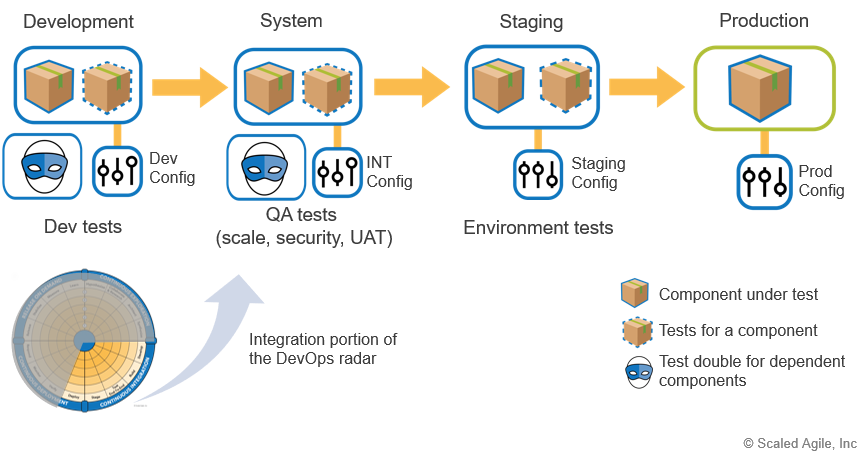

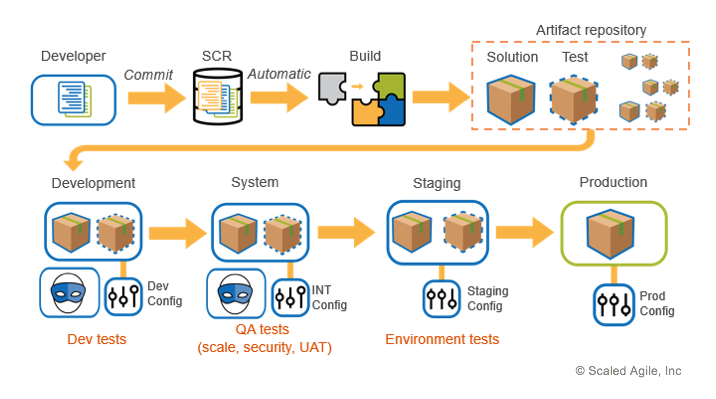

These, and other, built-in quality practices help create a Continuous Delivery Pipeline (CDP) and the ability to Release on Demand. Figure 5 illustrates the Continuous Integration portion of the CDP and shows how changes built into components are tested across multiple environments before arriving in production. ‘Test doubles’ speed testing by substituting slow or expensive components (e.g., enterprise database) with faster, cheaper proxies (e.g., in-memory database proxy).
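As a minimal sketch of the in-memory database proxy mentioned above, the following Python example uses the standard library's `sqlite3` module with the special `:memory:` database name. The `orders` table and the functions `create_schema`, `add_order`, `revenue`, and `make_test_connection` are hypothetical names chosen for illustration; a real enterprise database would sit behind the same queries in production.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) orders table used by the code under test."""
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL NOT NULL)"
    )


def add_order(conn: sqlite3.Connection, total: float) -> int:
    """Insert one order and return its generated id."""
    cur = conn.execute("INSERT INTO orders (total) VALUES (?)", (total,))
    conn.commit()
    return cur.lastrowid


def revenue(conn: sqlite3.Connection) -> float:
    """Sum all order totals; an empty table yields 0.0."""
    row = conn.execute("SELECT COALESCE(SUM(total), 0) FROM orders").fetchone()
    return float(row[0])


def make_test_connection() -> sqlite3.Connection:
    """Test double: a throwaway database that lives only in memory."""
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    return conn
```

Because each call to `make_test_connection()` returns a fresh, empty database, tests stay fast and do not interfere with one another.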

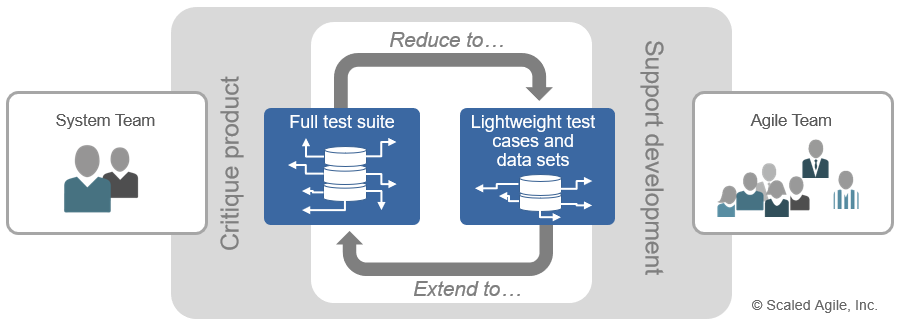

Accelerate Feedback with Reduced Test Suites

As test suites grow over time, they can delay Agile teams. Complete test suites can take significant time to set up and execute. Teams may create reduced test suites and test data (a ‘smoke test’) to verify the most important functionality before moving through other pipeline stages. They collaborate with the System Team to balance speed and quality and help ensure flow (see Figure 6).
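One way to picture a reduced suite is the sketch below, which uses Python's standard `unittest` module. The test case `CheckoutTests` and the choice of which tests count as the smoke test (`SMOKE_TESTS`) are assumptions made for illustration only.

```python
import unittest


class CheckoutTests(unittest.TestCase):
    """A stand-in for a larger test suite (hypothetical checks)."""

    def test_cart_total(self):
        self.assertEqual(sum([5, 7]), 12)

    def test_discount_rounding(self):
        self.assertEqual(round(19.999, 2), 20.0)

    def test_empty_cart(self):
        self.assertEqual(sum([]), 0)


# Assumption: the team has agreed these are the most important checks.
SMOKE_TESTS = ["test_cart_total", "test_empty_cart"]


def build_smoke_suite() -> unittest.TestSuite:
    """Reduced suite: only the named tests, for fast early feedback."""
    return unittest.TestSuite(CheckoutTests(name) for name in SMOKE_TESTS)


def build_full_suite() -> unittest.TestSuite:
    """Complete suite: every test in the test case, run in a later stage."""
    return unittest.defaultTestLoader.loadTestsFromTestCase(CheckoutTests)


def run(suite: unittest.TestSuite) -> unittest.TestResult:
    """Execute a suite and return its result object."""
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

An early pipeline stage could call `run(build_smoke_suite())` and proceed to `run(build_full_suite())` only if the smoke test succeeds.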

Achieving Architecture and Design Quality

A system’s architecture and design ultimately determine how well a system can support current and future business needs. Quality in architecture and design makes future requirements easier to implement and systems easier to test, and helps to satisfy NFRs.

Support Future Business Needs

As requirements evolve based on market changes, development discoveries, and other reasons, architectures and designs must also evolve. Traditional processes that force early decisions can result in suboptimal choices that cause inefficiencies that slow flow and/or cause later rework. Identifying the best decision requires knowledge gained through experimentation, modeling, simulation, prototyping, and other learning activities. It also requires a Set-Based Design approach that evaluates multiple alternatives to arrive at the best decision. Once determined, developers use the Architecture Runway to implement the final decision. Agile Architecture provides intentional guidance for inter-team design and implementation synchronization.

Design for Quality

As a system’s requirements evolve, its design must also evolve to support them. Low-quality designs are difficult to understand and modify, which typically results in slower delivery with more defects. Applying good coupling/cohesion and appropriate abstraction/encapsulation makes implementations easier to understand and modify. SOLID principles [5] make systems flexible so they can more easily support new requirements.

Design Patterns [6] describe well-known ways to support these principles and provide a common language to ease understanding and readability. Naming an element ‘Factory’ or ‘Service’ quickly denotes its intent within the broader system. Using a set-based design explores multiple solutions to arrive at the best design choice, not the first choice. See Design Quality, part of SAFe’s Built-in Quality guidance, for more details.

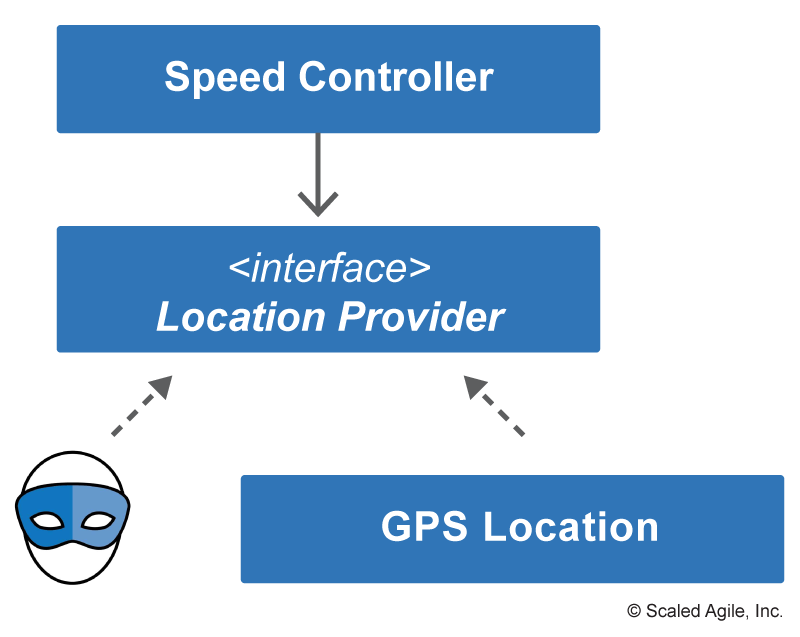

Architecting and Designing to Ease Testing

Architecture and design also determine a system’s testability. Modular components that communicate through well-defined interfaces create seams [7] that allow testers and developers to substitute expensive or slow components with test doubles. As an example, Figure 7 shows a Speed Controller component needing the current vehicle location from a GPS Location component to adjust its speed. Testing the Speed Controller with GPS Location requires the associated GPS hardware and signal generators to replicate GPS satellites. Replacing that complexity with a test double decreases the time and effort to develop and test the Speed Controller, or any other component that interfaces with GPS Location.
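The Speed Controller example can be sketched in Python as follows. This is an illustration under stated assumptions, not the design shown in Figure 7: the interface `LocationSource`, the double `FakeGpsLocation`, and the slow-zone rule inside `SpeedController` are all hypothetical.

```python
from typing import Protocol, Tuple

Position = Tuple[float, float]            # (latitude, longitude)
Zone = Tuple[float, float, float, float]  # (min_lat, min_lon, max_lat, max_lon)


class LocationSource(Protocol):
    """The seam: any component that can report the current position."""

    def current_position(self) -> Position: ...


class FakeGpsLocation:
    """Test double: returns a scripted position, no GPS hardware required."""

    def __init__(self, position: Position) -> None:
        self.position = position

    def current_position(self) -> Position:
        return self.position


class SpeedController:
    """Picks a target speed from the vehicle's position (hypothetical rule)."""

    DEFAULT_LIMIT_KPH = 80.0

    def __init__(self, location: LocationSource, slow_zone: Zone,
                 slow_limit_kph: float = 30.0) -> None:
        self._location = location
        self._slow_zone = slow_zone
        self._slow_limit_kph = slow_limit_kph

    def target_speed_kph(self) -> float:
        lat, lon = self._location.current_position()
        min_lat, min_lon, max_lat, max_lon = self._slow_zone
        inside = min_lat <= lat <= max_lat and min_lon <= lon <= max_lon
        return self._slow_limit_kph if inside else self.DEFAULT_LIMIT_KPH
```

Because `SpeedController` depends only on the `LocationSource` interface, a test can place the vehicle anywhere by constructing `FakeGpsLocation((lat, lon))`, while production code would pass an adapter for the real GPS Location component.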

Applying Design Quality in Cyber-Physical Systems

These design principles also apply to cyber-physical systems. Engineers in many disciplines use modeling and simulation to gain design knowledge. For example, integrated circuit (IC) design technologies (VHDL, Verilog) are software-like and share the same benefits from these design characteristics and SOLID principles [8]. Hardware designs also apply the notion of test doubles through simulations and models, or by building a wooden prototype before cutting metal.

This often requires a mindset change. Like software, hardware will also change over the system’s lifecycle. Instead of optimizing to complete a design for the current need, planning for future changes by building in quality provides better long-term outcomes.

Achieving Code Quality

All system capabilities are ultimately executed by the code (or components) of a system. The speed and ease of adding new capabilities depend on how quickly and reliably developers can modify that code. Inspired in part by Extreme Programming (XP) [9], several practices are listed here.

Unit Testing and Test-Driven Development

The unit testing practice breaks the code into parts and ensures that each part has automated tests to exercise it. These tests run automatically after each change and allow developers to make fast changes, confident that the modification won’t break another part of the system. Tests also serve as documentation and are executable examples of interactions with a component’s interface to show how that component should be used.

Test-Driven Development (TDD) guides the creation of unit tests by specifying the test for a change before implementing that change. This forces developers to think more broadly about the problem, including the edge cases and boundary conditions, before implementation. Better understanding results in faster development with fewer errors and less rework.
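A small illustration of the test-first order, using Python's `unittest`: the tests appear first and pin down the boundary conditions, and the implementation below them is the simplest code that satisfies them. The function `shipping_cost` and its pricing rule are hypothetical.

```python
import unittest


class ShippingCostTest(unittest.TestCase):
    """Written first: these tests specify the behavior, including boundaries."""

    def test_light_parcel_uses_base_rate(self):
        self.assertEqual(shipping_cost(0.5), 5.0)

    def test_boundary_weight_still_uses_base_rate(self):
        self.assertEqual(shipping_cost(1.0), 5.0)

    def test_heavier_parcel_adds_per_kg_charge(self):
        self.assertEqual(shipping_cost(3.0), 9.0)

    def test_non_positive_weight_is_rejected(self):
        with self.assertRaises(ValueError):
            shipping_cost(0)


def shipping_cost(weight_kg: float) -> float:
    """Written second: the simplest code that makes the tests above pass.

    Assumed rule: a base rate covers the first kilogram, and each
    additional kilogram adds a fixed charge.
    """
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    base, per_extra_kg = 5.0, 2.0
    extra = max(0.0, weight_kg - 1.0)
    return base + per_extra_kg * extra
```

Writing the boundary test at exactly 1.0 kg before the code exists forces an explicit decision about which side of the threshold that weight falls on.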

Pair Work

Pairing has two developers work on the same change at the same workstation. One serves as the driver, writing the code, while the other serves as the navigator, providing real-time review and feedback. Developers switch roles frequently. Pairing creates and maintains quality as the code will contain the shared knowledge, perspectives, and best practices from each member. It also raises and broadens the skillset for the entire team as teammates learn from each other.

Collective Ownership and Coding Standards

Collective ownership reduces dependencies between teams and ensures that any individual developer or team will not block the fast flow of value delivery. Any individual can add functionality, fix errors, improve designs, or refactor. Because the code is not owned by one team or individual, supporting coding standards encourages consistency so that everyone can understand and maintain the quality of each component.

Applying Code Quality in Cyber-Physical Systems

While not all hardware design has ‘code,’ the creation of physical artifacts is a collaborative process and can benefit from these practices. Computer Aided Design (CAD) tools used in hardware development provide ‘unit tests’ in the form of assertions for electronic design and then simulations and analysis in mechanical design. Pairing, collective ownership, and coding standards can produce similar benefits to create designs that are more easily maintained and modified.

Some hardware design technologies are very similar to code (e.g., VHDL), with clearly defined inputs and outputs that are ideal for practices like TDD [8].

Achieving System Quality

While code and design quality ensure that system artifacts can be easily understood and changed, system quality confirms that the systems operate as expected and that everyone is aligned on what changes to make. Tips for achieving system quality are highlighted below.

Create Alignment to Achieve Fast Flow

Alignment and shared understanding reduce developer delays and rework, enabling fast flow. Behavior-Driven Development (BDD) defines a collaborative practice where the Product Owner and team members agree on the precise behavior for a story or feature. Applying BDD helps developers build the right behavior the first time and reduces rework and errors. Model-Based Systems Engineering (MBSE) scales this alignment to the whole system. Through an analysis and synthesis process, MBSE provides a high-level, complete view of all the proposed functionality for a system, and how the system design realizes it.
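The agreed behavior in BDD is often phrased as Given/When/Then. The sketch below expresses one such scenario in plain Python, without any BDD library; the `Cart` class and the coupon rule are hypothetical and stand in for whatever behavior the Product Owner and team have agreed on.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Cart:
    """Minimal system under test (hypothetical)."""

    items: List[float] = field(default_factory=list)
    coupon_percent: float = 0.0

    def add(self, price: float) -> None:
        self.items.append(price)

    def apply_coupon(self, percent: float) -> None:
        self.coupon_percent = percent

    def total(self) -> float:
        discounted = sum(self.items) * (1 - self.coupon_percent / 100)
        return round(discounted, 2)


def scenario_coupon_reduces_total() -> float:
    """Scenario: a 10% coupon reduces the cart total by 10%."""
    # Given a cart containing two items
    cart = Cart()
    cart.add(40.0)
    cart.add(60.0)
    # When the customer applies a 10% coupon
    cart.apply_coupon(10)
    # Then the total is reduced by 10%
    total = cart.total()
    assert total == 90.0
    return total
```

Keeping the Given/When/Then wording as comments lets non-developers confirm that the test matches the behavior they agreed on.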

Continuously Integrate the End-to-End Solution

Scaling agility results in many engineers making many small changes that must be continually checked for conflicts and errors. Continuous integration (CI) and continuous delivery (CD) practices provide developers with fast feedback (Figure 8). Each change is quickly built then integrated and tested at multiple levels, including the deployment environment. CI/CD automates the process to move changes across all stages and knows how to respond when a test fails. Quality tests for NFRs are also automated. While CI/CD strives to automate all tests, some functional (exploratory) and NFR (usability) testing can only be performed manually.

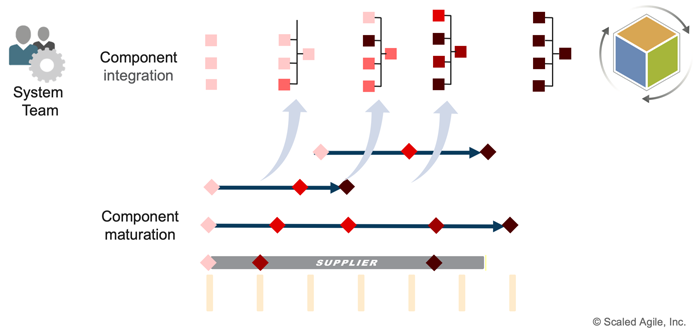
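To make the idea of moving a change through stages concrete, here is a deliberately simplified sketch of a pipeline runner that stops at the first failing stage. It does not model any particular CI/CD tool; the function `run_pipeline` and the stage names used with it are assumptions for illustration.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a check that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order; stop and report at the first failure."""
    log: List[str] = []
    for name, check in stages:
        if not check():
            log.append(f"{name}: FAILED")
            return False, log  # later stages never see a broken change
        log.append(f"{name}: ok")
    return True, log
```

For example, `run_pipeline([("build", build_ok), ("unit tests", tests_ok)])` returns the overall result together with a log of each stage reached, where `build_ok` and `tests_ok` are whatever checks the team supplies.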

Applying System Quality in Cyber-Physical Systems

Cyber-physical systems can also support a fast-flow, CI/CD approach—even with a long lead time for physical parts. As stated earlier, simulations, models, previous hardware versions, and other proxies can substitute for the final system components. Figure 9 illustrates a system team providing a demonstrable platform to test incremental behavior by connecting these component proxies. As each component matures (shown by increasing redness), the end-to-end integration platform matures as well. With this approach, component teams become responsible for supporting both their part of the final solution as well as maturing the incremental, end-to-end testing platform.

Achieving Release Quality

Releasing allows the business to measure the effectiveness of a Feature’s benefit hypothesis. The faster an organization releases, the faster it learns and the more value it delivers. Modular architectures that define standard interfaces between components allow smaller, component-level changes to be released independently. Smaller changes allow faster, more frequent, less risky releases, but require an automated pipeline (shown in Figure 2) to ensure quality.

Unlike a traditional server infrastructure, an ‘immutable infrastructure’ does not allow changes to be made manually and directly to production servers. Instead, changes are applied to server images, validated, and then launched to replace currently running servers. This approach creates more consistent, predictable releases. It also allows for automated recovery. If the operational environment detects a production error, it can roll back the release by simply launching the previous image to replace the erroneous one.
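The release and rollback behavior described above can be modeled in a few lines. This is a toy model under stated assumptions, not a real deployment tool: the class `ImmutableFleet` and the image names are hypothetical, and launching servers is reduced to tracking which image is current.

```python
from typing import List


class ImmutableFleet:
    """Tracks which validated image is running; servers are never patched."""

    def __init__(self, initial_image: str) -> None:
        self._history: List[str] = [initial_image]

    @property
    def running_image(self) -> str:
        return self._history[-1]

    def release(self, new_image: str) -> None:
        """Replace the running servers with ones launched from new_image."""
        self._history.append(new_image)

    def roll_back(self) -> str:
        """Relaunch the previous image and return its name."""
        if len(self._history) < 2:
            raise RuntimeError("no previous image to roll back to")
        self._history.pop()
        return self.running_image
```

Since every release is a complete image rather than an in-place edit, rolling back is the same operation as releasing: launch a known image and retire the current one.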

Supporting Compliance

For systems that must demonstrate objective evidence for compliance or audit, releasing has additional conditions. These organizations must prove that the system meets its intended purpose and has no unintended, harmful consequences. As described in the Compliance article, Lean Quality Management System (QMS) defines approved practices, policies, and procedures that support a Lean-Agile, flow-based, continuous integrate-deploy-release process.

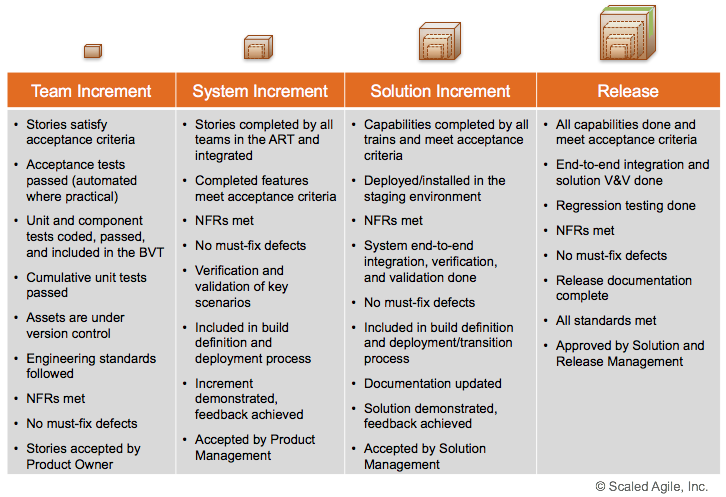

Scalable Definition of Done

Definition of Done is an important way of ensuring that an increment of value can be considered complete. The continuous development of incremental system functionality requires a scaled definition of done to ensure the right work is done at the right time, some early and some only for release. An example is shown in Table 1, but each team, train, and enterprise should build their own definition. While these may be different for each ART or team, they usually share a core set of items.

Achieving Release Quality in Cyber-Physical Systems

The sentiment for release quality is not to let changes lie idle, waiting to be integrated. Instead, integrate changes quickly and frequently through successively larger portions of the system until the change arrives in an environment for validation. Some cyber-physical systems may validate in the customer environment (e.g., over-the-air updates in vehicles). Others proxy that environment with one or more mockups that strive to gain early feedback, as shown previously in Figure 5. The end-to-end platform matures over time, providing higher levels of fidelity that enable earlier verification and validation (V&V) as well as compliance efforts. For many systems, this early V&V and compliance feedback is critical to understanding the ability to manufacture or release products.

Learn More

[1] Manifesto for Agile Software Development. www.AgileManifesto.org

[2] Beck, Kent. Test-Driven Development: By Example. Addison-Wesley, 2003.

[3] Pugh, Ken. Lean-Agile Acceptance Test-Driven Development: Better Software Through Collaboration. Addison-Wesley, 2010.

[4] Gothelf, Jeff, and Josh Seiden. Lean UX: Designing Great Products with Agile Teams. O’Reilly Media, 2016.

[5] Martin, Robert. The Principles of OOD. http://butunclebob.com/ArticleS.UncleBob.PrinciplesOfOod

[6] Gamma, Erich, et al. Design Patterns: Elements of Reusable Object-Oriented Software. Addison-Wesley, 1994.

[7] Feathers, Michael. Working Effectively with Legacy Code. Prentice Hall, 2005.

[8] Jasinski, Ricardo. Effective Coding with VHDL: Principles and Best Practice. The MIT Press, 2016.

[9] Beck, Kent. Extreme Programming Explained: Embrace Change. Addison-Wesley, 1999.

Last updated: 10 February 2021