Continuous Delivery

By Scott Prugh

Chief Architect – CSG International

UPDATE: Oct 31, 2014: also check out this video on the same topic by Scott from DevOps Enterprise Summit 2014.

The evolution of Lean, Agile and XP practices, manifested in the Scaled Agile Framework and other methods, has revolutionized the way we specify, prioritize and deliver value through software. It is no longer debatable that these techniques deliver value more effectively than traditional waterfall techniques. The Lean foundation of these practices forces us to holistically optimize the value stream and continually remove wasteful work or work that creates delays. In many organizations, this optimization has not been taken far enough, and the Define, Build, Test cycle ends at “Test”, resulting in a large downstream handoff to a set of Operations personnel for deployment. This creates risk and churn, enforces role stove-piping and de-optimizes end-to-end efficiency. This article discusses techniques for combatting this problem in large enterprises, where role preservation may outweigh the call for efficiency, by forming a “Shared Operations Team (SOT)” to support a Continuous Delivery Pipeline. Readers are assumed to have a high-level understanding of queues and handoff dysfunctions in software development (all queues are bad, large batches are bad). Familiarity with the SAFe Framework and Glossary of terms is also helpful.

How did we get here?

Development and Waterfall

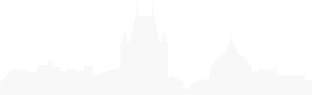

Traditional management practices in large organizations focused heavily on organizational stovepipes, role specialization and command and control. The common belief held that individual and specialized efficiency yielded the best gains over time. In software engineering we saw this taken to the extreme with the advent of Waterfall where every role (Requirements, Design, Development, and Testing) was farmed out to a specialized organization. The mantra was “Optimize by Role” not “Optimize the Whole”, as Figure 1 illustrates.

Skill Shapes

Role-specific optimization not only de-optimizes the flow of value through a business process with large amounts of variability, it also de-optimizes each employee’s future potential and creates a mindset of “hiring for specialized, current skill” vs. “hiring for talent.” Role-specific people who are deep in one area are often referred to as “I-Shaped” and struggle to handle multiple roles. More recent conventional wisdom has focused on hiring “T-Shaped” people who are deep in one area, yet capable across a variety of areas. This adds great flexibility to the problems an organization can tackle. More recently still, investment in “E-Shaped” people (expertise, experience, execution, exploration) has become the ultimate goal, as Figure 2 illustrates.

The Move to Lean and Agile Development

Over the last decade, two significant changes have occurred that put pressure on these current organizational pictures and bring the inherent structural design into question: 1) adoption of Agile & Lean practices in software development and 2) commoditization of IT infrastructure via virtualization & “The Cloud” (infrastructure as code).

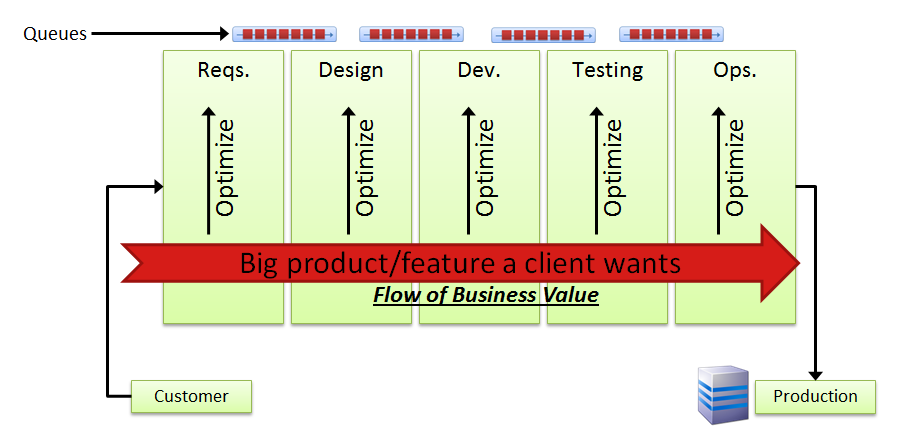

With the adoption of Agile & Lean, it became apparent that over-reliance on specialized roles de-optimized the flow of value and created constraints and delay. As software complexity grew and the demands increased, the traditional waterfall software pipeline broke down. The better product development shops began to understand that requirements and design could not be isolated from development. We also developed the understanding that testing was an integral part of the entire life cycle and could often affect the requirements, design and especially, how the software was developed. To gain the next level of efficiency the development stove-pipes between the roles needed to be broken down. This was accomplished by creating cross-functional, self-empowered Agile Teams as Figure 3 shows. Additional investments in Continuous Integration at the Team and Program Levels further enhance this agility and quality.

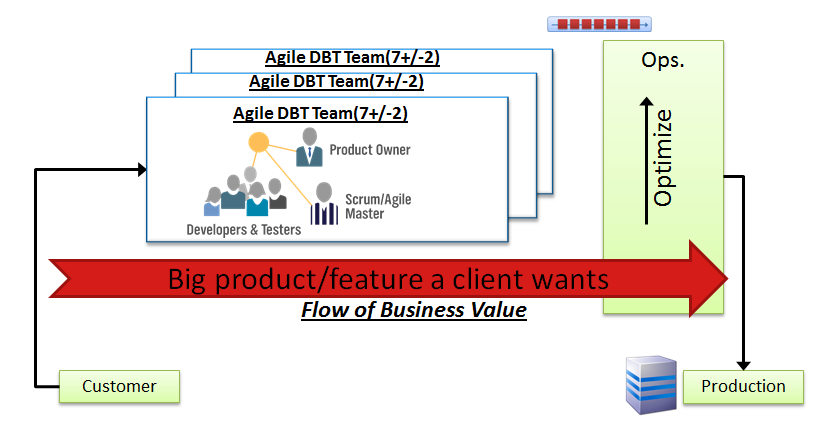

Hitting the Operations Wall

Once the development dysfunctions are addressed, these new cross-functional teams accelerate their rate of delivery and put substantial pressure on other parts of the system that are not tuned to the same velocity. Due to traditional management techniques (role specialization and command and control) as well as proprietary technology, operations teams are typically organized much the same way development teams had been organized, that is by role, not flow of value, as Figure 4 illustrates.

In Figure 4, the Platform Architects make many of the same design decisions traditionally made by Analysis and Design groups in development. Operational Engineering then attempts to document these decisions in “work-plans” or “run-books”, and these plans are executed by System Admins, Network Admins, Storage Admins or DBAs. The Product Operations groups specialize in the functionality of the product, but have limited understanding of the deployment technology or development decisions that brought it to this point. All this work is managed with work-plans between each group, creating queues and handoffs. The result is long wait times for the customer, downstream failures and misunderstandings of how to operate the software.

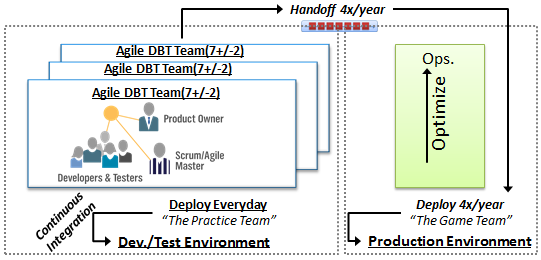

This is compounded by the fact that, in order to survive on a day-to-day basis and prevent delays, development teams deploy, manage and configure internal environments themselves. Tactically, this plan works to a point, and these highly optimizing teams become very good at deploying and testing these environments on a daily basis. This process becomes the lifeblood of a development-focused Continuous Integration Pipeline that enables their agility.

Organizational boundaries, environment ownership and role specialization can hold these lines in place, creating another series of problems. In development, “The Practice Team” gets many attempts (perhaps many per day) in low-risk environments to perfect their processes and tools. In production, “The Game Team” gets very few attempts (4 per year) to perfect their processes and tools in a very different environment with different constraints. Note that these development environments are often largely incongruent with production, lacking many of the production assets like security, firewalls, load balancers and SAN.

To use a sports analogy: These two teams are playing on different fields and running different plays but trying to score with the same ball. Imagine playing a sport and having two different teams: one team practices daily and the other a few times a year. When game time comes you always play the team that practices less. This is not a recipe for success in sports or software development operations. Practice makes you better, so why not practice early and often?

Continuous Delivery and Shared Operations Teams

In an ideal world, the teams that write the software would also be on the hook to run the software (see Netflix Operations: Part I, Going Distributed). Also ideal would be reducing feature delivery cadence to daily, or at least to a unit smaller than a PSI or multiple PSIs. We realize, however, that many large enterprises have complexities and dependencies that currently prevent us from reaching this goal. Additionally, most organizations will rightly be wary of fully decentralizing their operations processes and the deployment of production systems that must be extremely reliable and secure. Clearly, we still have a lot of work to do before both development and operations teams are capable of deploying and operating quality systems. Doing this with a “dual team” role requires commitment and concession from both sides (Development and Operations). The end goal is to give the Operations Teams as much exposure as early as possible to running the software before it goes into production. This exposure will force a reduction in the feedback loop lag time and also reduce the overall batch size of the handoff by introducing change early and often.

To do this, we provide four recommendations as discussed in the paragraphs below.

1. Designate a “Shared Operations Team (SOT)”

Designate a “Shared Operations Team (SOT)” to manage all environments per product/area (Development, Test, Pre-release, and Production). In SAFe, this may map to a Program or Release Train.

By having one team manage all environments, we remove a large batch handoff that occurs towards the end of a PSI or a release. Operations teams become familiar with changes to the software and new expectations that may impact the operational footprint. This eliminates surprises and last-minute scrambles and builds a stronger, shared understanding between development and operations.

While operations teams might argue that they cannot focus on the day-to-day needs of development, and development teams might argue that they need higher responsiveness and control, both concerns can be mitigated via automation and SLA/QOS guarantees. In reality, automation and daily optimization will eventually make SLAs unnecessary, but putting them in place is a good start. Forming an SOT may also require moving people from development to operations or operations to development. We have seen two models of SOTs (development owned and operations owned), and either will yield benefits as long as the right people are put in place and dedicated to SOT success.

2. Automate Everything and Deploy Daily

Automate everything and deploy daily using the same tools, processes and team that will deploy in production. Remove impediments and work them into the backlog.

Daily responsiveness (or hourly, depending on level of maturity) will force the need for automation to come to the forefront. Treat infrastructure as code, check it into source control and run it all the time. This embraces a key Continuous Delivery tenet, “If it hurts, do it more”, and forces removal of impediments. Since an SOT will be the eventual team that executes the production install, they will get daily feedback on what works well and what does not. They will also be able to anticipate problems with the deployment pipeline that could cause issues with production constraints (security, change review processes, outage windows, etc.).

This pipeline becomes a continuous shared heartbeat for both the SOT and the development teams that rely on it. As such, the SOT and development will work quickly to prioritize any impediments into the Team or Program Backlog. It should not be understated how powerful this technique can be. Operations concerns that were often overlooked or hacked around now come to the forefront daily and receive the full attention of many high-performing development teams and an SOT working to remove them. The SOT becomes an active, daily customer, addressing priority concerns and escalating as needed. If something becomes painful today, the thought of doing it again tomorrow or in the next hour becomes unbearable. In the traditional handoff, this pain comes only several times a year, and it becomes the norm to exert heroic efforts at late hours to fix the problem and then forget it until the next release. When rolling out an SOT, it is important to budget spare capacity for addressing these needs until the end-to-end pipeline stabilizes.
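As a rough illustration of "the same tools, processes and team" for every environment, the sketch below keeps environment descriptions as data and runs one deployment routine against all of them. The `Environment` fields, host names and step wording are hypothetical and invented for this example; a real pipeline would call actual provisioning and install tooling rather than returning strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    """Hypothetical environment description, intended to live in source control."""
    name: str
    hosts: tuple
    requires_change_review: bool = False


def deployment_steps(env: Environment, version: str) -> list:
    """Return the ordered steps for deploying `version` to `env`.

    The same routine serves every environment; only the data differs.
    """
    steps = []
    if env.requires_change_review:
        # Production-style constraint, expressed as data rather than a separate process.
        steps.append(f"verify change approval for {version}")
    for host in env.hosts:
        steps.append(f"install {version} on {host}")
        steps.append(f"smoke-test {host}")
    return steps


# Assumed example environments; names and hosts are placeholders.
ENVIRONMENTS = {
    "dev": Environment("dev", ("dev-01",)),
    "prod": Environment("prod", ("prod-01", "prod-02"), requires_change_review=True),
}

if __name__ == "__main__":
    for step in deployment_steps(ENVIRONMENTS["dev"], "1.4.2"):
        print(step)
```

Because development and production go through the same function, any step that hurts in production also hurts daily in development, which is where it is cheapest to fix.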

3. Implement Environment Congruency

Make non-production environments as similar to production as possible without causing impediments.

Separated ownership makes it easy to short-circuit many of the constraints that exist in production. Development environments may also lack access to specialized hardware like load-balancers and firewalls. Production environments will also have strict controls around access rights (security) and times (outage windows). This lack of understanding and environment incongruence can cause massive downstream failure where development teams produce assets that cannot be deployed effectively.

Due to this, every attempt should be made to make the non-production environments as similar to production or emulate the production constraints in as many ways as possible. This will result in fewer downstream surprises. It will also drive automation concerns to places that development teams were previously unaware. In many cases, exposure to production-class environments will alter designs of the architecture to make them friendlier in these constrained or different environments. Everyone gets smarter from this approach.
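One way to make incongruence visible is to describe each environment as data and report where it differs from production. The sketch below is only an illustration under that assumption; the keys and values (load balancer, firewall, storage, outage window) are made-up examples, not a prescribed schema.

```python
def congruence_gaps(production: dict, candidate: dict) -> dict:
    """Map each differing setting to a (production value, candidate value) pair.

    A setting missing from one side is reported with None for that side.
    """
    gaps = {}
    for key in sorted(set(production) | set(candidate)):
        prod_value = production.get(key)
        cand_value = candidate.get(key)
        if prod_value != cand_value:
            gaps[key] = (prod_value, cand_value)
    return gaps


# Hypothetical environment descriptions used only for this example.
PRODUCTION = {
    "load_balancer": True,
    "firewall": True,
    "storage": "SAN",
    "outage_window": "Sun 02:00-04:00",
}
TEST = {
    "load_balancer": False,
    "firewall": True,
    "storage": "local disk",
}

if __name__ == "__main__":
    for setting, (prod_value, test_value) in congruence_gaps(PRODUCTION, TEST).items():
        print(f"{setting}: production={prod_value!r} test={test_value!r}")
```

A report like this could be reviewed by the SOT and the development teams together, with each gap either closed or consciously accepted.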

As an example, we have experienced many cases where changes to database schemas are either 1) handed off to a DBA team to figure out or 2) automated but run on unrealistic volumes (100s of MB vs. 100s of GB), and they fail to be deployed effectively. The traditional structures leave this as a late-night blame game between teams to unwind the mess. Advanced pipelines remove the need for DBAs in development through cross training, automate schema changes and execute them daily. This includes realistic load and volume testing against sanitized customer data, ideally running migrations every day. The end result is that such a conversion can be run hundreds of times with realistic scenarios before seeing production traffic.
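A minimal sketch of automated, repeatable schema changes is shown below, using Python's built-in `sqlite3` module and an in-memory database purely for illustration. The `MIGRATIONS` list, the `customer` table and the `schema_version` bookkeeping table are assumptions made for the example; the sketch does not address the realistic data volumes discussed above, which would require running against a production-sized, sanitized copy.

```python
import sqlite3

# Hypothetical ordered migrations; in practice these would live in source control.
MIGRATIONS = [
    (1, "CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "ALTER TABLE customer ADD COLUMN email TEXT"),
]


def migrate(conn: sqlite3.Connection) -> list:
    """Apply pending migrations in order and return the versions applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_version")}
    newly_applied = []
    for version, statement in MIGRATIONS:
        if version in applied:
            continue  # already applied on an earlier run
        conn.execute(statement)
        conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
        conn.commit()
        newly_applied.append(version)
    return newly_applied


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    print(migrate(connection))  # first run applies everything pending
    print(migrate(connection))  # second run finds nothing left to do
```

Recording applied versions makes the routine safe to run every day in every environment, so the production run is simply one more execution of a well-practiced path.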

4. Cross Train Teams

4a. Cross Train Operations Personnel to Handle Multiple Roles

The traditional operations stove-piping and reliance on “I-Shaped people” should be broken down. Doing this requires operations personnel to be trained in more than one traditional role or discipline (SA, Network, SAN, DBA, and Deployment). All operational engineers should be trained to handle all basic tasks across the technology footprint: provisioning a VM, installing and configuring the OS, allocating IP addresses, configuring LUNs on the SAN, installing software and DBA tasks like configuration, backup and restore. These engineers will also need to go the next step and learn automation and programming. This vital skill will help them deal with the perceived increase in workload by applying automation to time-consuming and mundane tasks. Queues, handoffs and delays will disappear, and overall efficiency and throughput will skyrocket as work no longer sits in a role-specific task queue waiting to be serviced.

The large downside of these “T-Shaped people” is that they can individually be more expensive. Traditionalists will use this fact against investment (“I can hire 2 SAs for every multi-skilled Operational Engineer”). The fact is that with cross training and engineering skills, they can do orders of magnitude more work than their “I-Shaped” counterparts and also improve overall flow of work by removing queues and wait time. Besides the obvious business benefits, investing in cross-training for the willing is the right thing for their growth and downright makes their work more fun. Who doesn’t want that?

4b. Cross Train Development Personnel on Operations Practices. Consider Rotation.

It is important that development personnel learn more about the operations considerations and environments that their code will ultimately run in. It also helps to give these engineers exposure to the customer support process and even field support calls about issues in production. Nothing can be more motivating and humbling to a developer than fielding a call from a frustrated customer about a software defect they created. A great way to facilitate this is through a rotation schedule where all developers get a chance to rotate through the operations and support group. This can be coordinated on a release or annual cadence. We have even found scenarios where some engineers prefer the day-to-day tactics of operations and support and thrive in this environment to become star performers. Inevitably, developers gain a much better understanding of the downstream impacts of the assets they create and this understanding influences the design and implementation of features in a hugely powerful way.

4c. Cross Train Operations Personnel on Development Practices. Consider Rotation.

The other side of the fence holds benefits too. Consider rotating operations personnel onto development teams. Have them bring their operational knowledge to the team as well as requirements and improvements they would like to see to streamline the pipeline. Use this as a cross training opportunity to beef up engineering skills for operational personnel by pairing on stories.

Summary

Traditional management techniques in large software organizations over-emphasize role specialization and command-and-control functional hierarchies. As software organizations unwind this dysfunction through adoption of Lean and Agile techniques, they soon hit barriers where flow in downstream organizations is not tuned to their rate of delivery. One common place for this impediment to occur is the handoff between development and operations at the PSI or Release boundary. To mitigate these issues, enable flow and enhance value delivery efficiency, we suggest deploying a Shared Operations Team for each product/product area. These teams should be involved in the day-to-day deployment and management of the software in non-production environments, as well as managing the final production install and support.

Learn More

[1] Leffingwell, Dean. Agile Software Requirements: Lean Requirements Practices for Teams, Programs, and the Enterprise. Addison-Wesley, 2011.

[2] Reinertsen, Don. Principles of Product Development Flow: Second Generation Lean Product Development. Celeritas Publishing, 2009.

[3] Humble, Jez, and David Farley. Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation. Addison-Wesley, 2010.

[4] Larman, Craig, and Bas Vodde. Scaling Lean & Agile Development: Thinking and Organizational Tools for Large-Scale Scrum. Addison-Wesley, 2008.

[5] Hackman, Richard, and Greg Oldham. Work Redesign. Prentice Hall, 1980.

[6] Davanzo, Sarah. Business trend: “E-shaped” people, not “T-shaped.” http://culturecartography.wordpress.com/2012/07/26/business-trend-e-shaped-people-not-t-shaped.

Last Update: 14 February 2013